The Hidden Tax of Extracts (and How to Eliminate It)


Picture this: you’ve just built your dream home. It’s modern, secure, and powerful. It has a state-of-the-art security system, energy-efficient HVAC, lighting and water, plus room to grow. To top it off, it has a smart setup that keeps all these modern amenities connected and working in unison.

But then, you decide to sleep in the “starter home” you lived in before because it feels simpler and more familiar. The dream home, by contrast, seems intimidating: it’s so new and complex. So even though your old home lacks the security, efficiency, and scale of your dream home, it’s easier to do things “the way they’ve always been done” than to embrace more modern options.

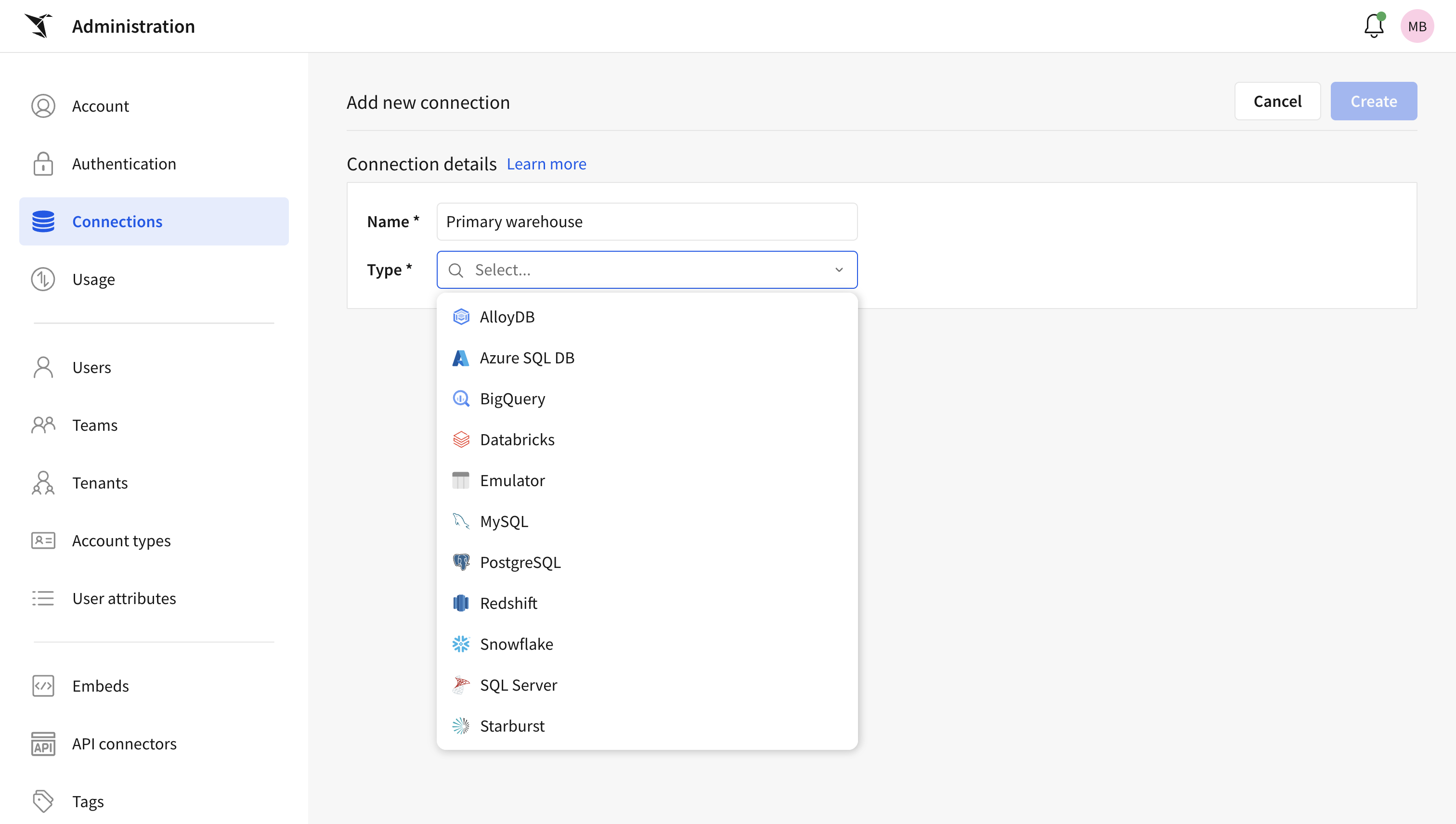

That’s how many teams treat data. They invest heavily in Snowflake, Databricks, Redshift, or BigQuery, then extract the data they need into third-party BI tools for analytics and wonder why decisions lag behind reality.

My stance is simple: move out of your starter home and embrace the dream house. Keep the data in the warehouse, analyze it there, and act there. When you move into the dream home, you stop paying the hidden tax of copies, delays, and drift, and you finally get what you built the house for in the first place: fast, trusted decisions.

The extract mindset is helping no one

Extracts weren’t a preference; they were a placeholder for plumbing that didn’t exist yet. In the on-prem, desktop era, BI tools had to pull data into their own engines, so extracts became muscle memory. Tableau normalized extracts in its Hyper engine; Power BI defaulted to Import mode (VertiPaq) while DirectQuery felt cramped; Qlik loaded data into its associative engine. That pattern of pulling data out and working elsewhere stuck.

This old-school philosophy has lingered for two reasons.

- Cost folklore: I hear “extracts are cheaper” all the time. The logic goes that you run one big extract (a single warehouse query), and then everyone hits the copy for “free.” That math ignores reality: scheduled extracts run whether anyone uses them or not, and the copies create governance drag, stale numbers, and multiple versions of the truth.

- Constrained early “live” modes: DirectQuery was limited, and many tools “checked the live box” without being practical at scale, so teams retreated to imports.

But the dream house has now been built. It’s time to move in.

When your starter home is pricier than the dream house

IT’s biggest fear in shifting away from extracts is runaway warehouse bills from “too many” live queries. But my counter is that the real waste is hidden in massive scheduled extracts that run whether anyone uses them or not.

Here’s what that looks like in practice:

- Idle extracts. Daily, even hourly, “just in case” pulls rack up compute and storage costs, even for content no one uses.

- Long runtimes. I’ve seen refreshes that run 20+ hours. That’s insane, and it’s time and credits you never get back.

- Governance breakdown. Copies multiply. Policies set in the warehouse don’t follow the data. Lineage and audit trails fragment.

- Maintenance. Schedules fail. Retries pile up. Numbers go stale. Then someone spends a day (or more) firefighting instead of analyzing.
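To make the “runs whether anyone uses them or not” point concrete, here is a back-of-the-envelope cost model. It is a minimal sketch, not a benchmark: every number in it (refresh frequency, credits per refresh, credits per query, cache hit rate, query volume) is a hypothetical placeholder, and the `ExtractSchedule` / `LiveUsage` names are illustrative only. Plug in your own figures.

```python
from dataclasses import dataclass


@dataclass
class ExtractSchedule:
    refreshes_per_day: int      # hypothetical: how often the extract is rebuilt
    credits_per_refresh: float  # hypothetical: warehouse cost of one full refresh


@dataclass
class LiveUsage:
    queries_per_day: int        # hypothetical: live queries people actually issue
    credits_per_query: float    # hypothetical: average cost of one live query
    cache_hit_rate: float       # fraction of queries answered from a results cache


def extract_cost(s: ExtractSchedule, days: int) -> float:
    # Scheduled refreshes run regardless of whether anyone opens the content.
    return s.refreshes_per_day * s.credits_per_refresh * days


def live_cost(u: LiveUsage, days: int) -> float:
    # Only cache misses reach the warehouse, so only they consume credits.
    billable_queries = u.queries_per_day * (1.0 - u.cache_hit_rate)
    return billable_queries * u.credits_per_query * days


if __name__ == "__main__":
    hourly_extract = ExtractSchedule(refreshes_per_day=24, credits_per_refresh=2.0)
    nobody_looking = LiveUsage(queries_per_day=0, credits_per_query=0.125, cache_hit_rate=0.5)
    busy_team = LiveUsage(queries_per_day=400, credits_per_query=0.125, cache_hit_rate=0.5)

    print(extract_cost(hourly_extract, days=30))  # 1440.0
    print(live_cost(nobody_looking, days=30))     # 0.0
    print(live_cost(busy_team, days=30))          # 750.0
```

The specific totals matter less than the shape: extract cost is fixed by the schedule, even at zero usage, while live cost tracks actual use and shrinks as caching improves.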

All of this means you’re working with stale data, broken access policies, and lost lineage and auditability. Teams end up making decisions on old or partial numbers, and they have no way to drill down to lower grains of data because the data’s been “right-sized” to fit the extract. Extracts become a source of chaos, not clarity, as multiple versions of the truth and avoidable policy violations become the norm.

Even if live analytics increases query volume, costs don’t have to follow. DoorDash, for example, saw a ~30% increase in queries with Sigma while keeping its Snowflake spend flat. That’s the point: keep the work in the house, and you gain speed and trust without blowing the budget.

Welcome to Sigma’s house: Unlocking live warehouse analytics

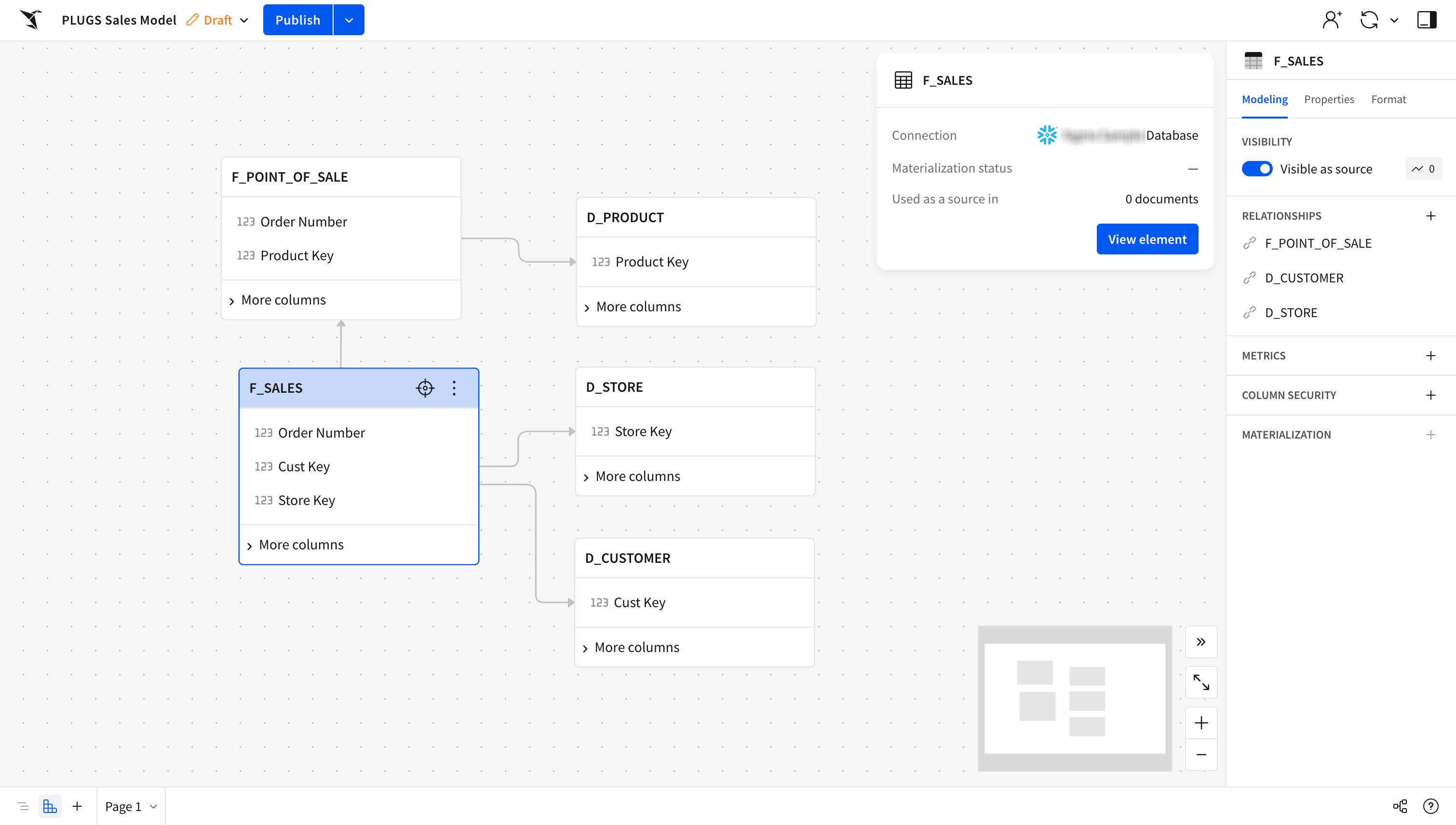

With Sigma, you finally use what you paid for. Live queries ride the power, performance, security, and scale of the warehouse itself. Modern cloud data warehouse (CDW) engines are every bit as fast as, and often faster than, the third-party engines extracts rely on, with one crucial difference: the data never leaves. Warehouse data access policies travel with every query, so the right people see the right rows. The warehouse remains the single source of truth, and decision-makers work with all the rows in a table, not whatever can squeeze into an extract.

There’s a wide gap between a product built cloud-native, like Sigma, and one built for on-premises architecture and later adapted to the cloud, like all of Sigma’s predecessors. Because Sigma was built from the ground up to leverage cloud warehousing, its model enables cost-efficient analytics at enterprise scale. AlphaQuery is central to that: it lets calculations resolve in Sigma’s metadata when appropriate, instead of hammering the warehouse. Relationship modeling prunes unnecessary joins. Query limits, lazy loading, and sensible row limits prevent accidental blowups. Under the hood, a warehouse-optimized connector leverages results caching and avoids “select-star” style waste. The result is governed, current analytics that scale without lighting up credits.
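Sigma’s connector internals aren’t described here, so the following is only a generic illustration of two of the ideas named above, results caching and row limits, with Python’s built-in `sqlite3` standing in for a warehouse. The `LiveQueryClient` class, its `max_rows` default, and the version-based cache key are hypothetical and are not Sigma’s implementation.

```python
import sqlite3


class LiveQueryClient:
    """Hypothetical wrapper: caches results per (sql, data_version) and caps rows."""

    def __init__(self, conn: sqlite3.Connection, max_rows: int = 1000):
        self.conn = conn
        self.max_rows = max_rows
        self.data_version = 0  # bumped whenever the underlying data changes
        self.cache: dict[tuple[str, int], list[tuple]] = {}
        self.warehouse_hits = 0  # how many queries actually reached the engine

    def invalidate(self) -> None:
        # New data landed (e.g. after a write-back), so old cache keys no longer match.
        self.data_version += 1

    def query(self, sql: str) -> list[tuple]:
        key = (sql, self.data_version)
        if key in self.cache:
            return self.cache[key]  # served from cache: no engine work
        self.warehouse_hits += 1
        cursor = self.conn.execute(sql)
        # Row limit: pull at most max_rows rows back to the client.
        rows = cursor.fetchmany(self.max_rows)
        self.cache[key] = rows
        return rows


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(i, i * 1.5) for i in range(5000)])

    client = LiveQueryClient(conn, max_rows=100)
    rows = client.query("SELECT id, amount FROM orders")
    print(len(rows))              # 100
    client.query("SELECT id, amount FROM orders")
    print(client.warehouse_hits)  # 1 (second call was a cache hit)
    client.invalidate()
    client.query("SELECT id, amount FROM orders")
    print(client.warehouse_hits)  # 2 (data version changed, so the query re-ran)
```

Keying the cache on a data version, rather than on a timer, means a change to the underlying data invalidates old entries instead of serving stale numbers, while repeated identical queries in between cost nothing.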

But wait, there’s more, and this might be the most important differentiation of all: you don’t just analyze in the house. You act there, too. With Sigma, teams write data back directly to the warehouse and run workflows in the same governed environment. No exporting to spreadsheets, no copy-paste into other systems, no email approvals in the dark. Make the update, and it flows through instantly.

Come inside, the door’s open

My two cents: stop investing heavily in data infrastructure only to work outside of it. Live analytics doesn’t have to be expensive when you do it right. In fact, it’s often the waste around extracts that drives costs and delays.

I get that it may feel counterintuitive. After all, you’ve been doing it the other way for a long time, so people naturally assume you need an extract layer because that’s the way it’s always been. But that habit is exactly what slows decisions and frays trust. Sigma is built for this cloud world: no extracts, no delays, no nonsense. Just governed, live analytics and writeback in the warehouse that make updates flow through immediately and help everyone stay on the same page.

Come inside and make the most of the dream home you built. If this resonates, start your next analysis in the house and keep it there. Watch our webinar to learn more about how to retire on-prem BI.