How to Curate the Right Context for AI Agents

As generative AI becomes table stakes in the analytics space, teams expect agents to write complex queries, automate analysis, and take action on the results. The opportunity for agentic analytics is clear to every data leader, but most teams are still figuring out how to move from prototype to production and provide the right context to LLMs at scale.

LLMs are smart. Benchmarks for the most recent models show they can match human experts on many tasks, but only when they receive the right information. Insufficient context increases the risk of incorrect outputs and can stop your AI programs in their tracks.

At Sigma, we focus on curating the right context for AI capabilities built into our platform. We’ll break down the context we use to power agentic analytics and AI apps, and how our customers are moving from prototype to production.

The Missing Layers for AI Agents

Under the hood, all an LLM does is predict the next token (a word or word fragment) in a sequence. These models do this very well and are trained on massive amounts of public data, but they don’t understand your business. They don’t know how a company defines “active customers,” which dashboards leadership relies on, or which tables are considered production-ready. That institutional knowledge is fragmented across your data stack (transformation code, schemas, semantic layers, etc.) and human expertise.

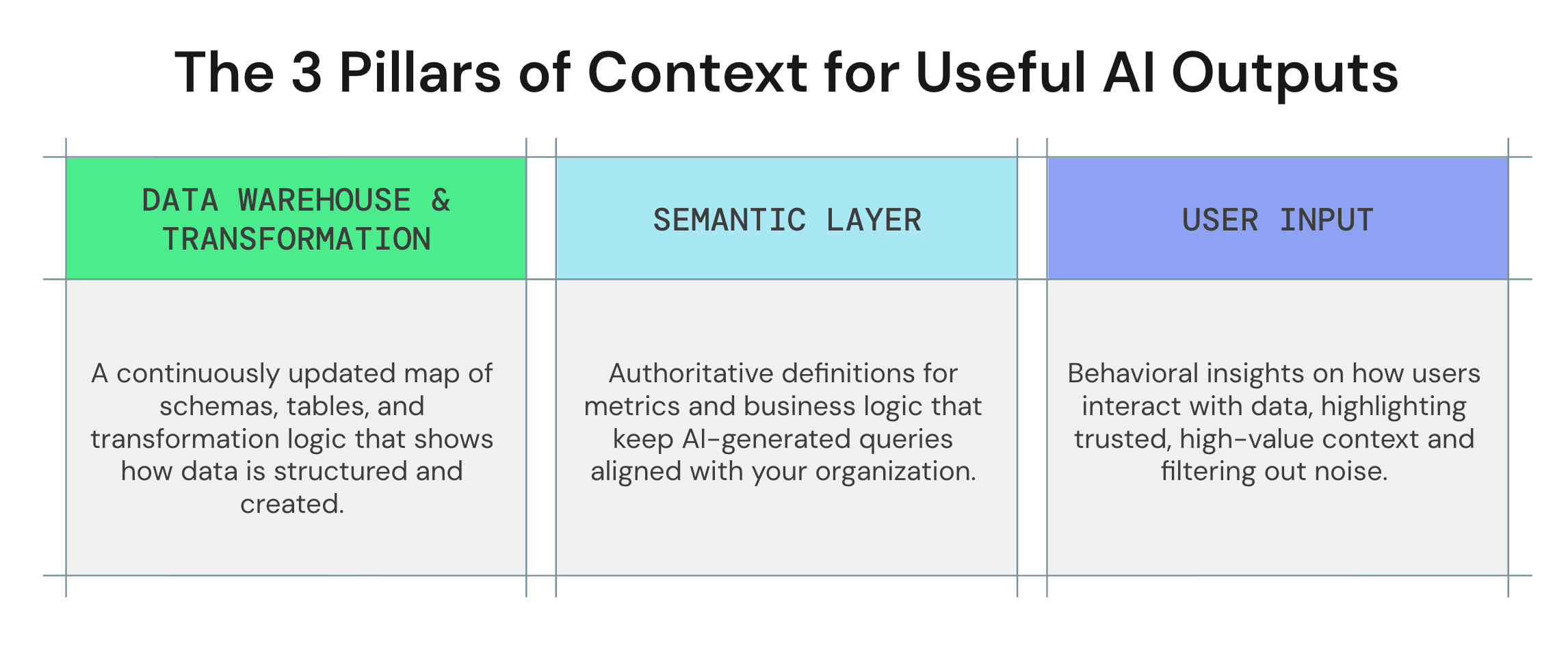

The 3 Pillars of Context

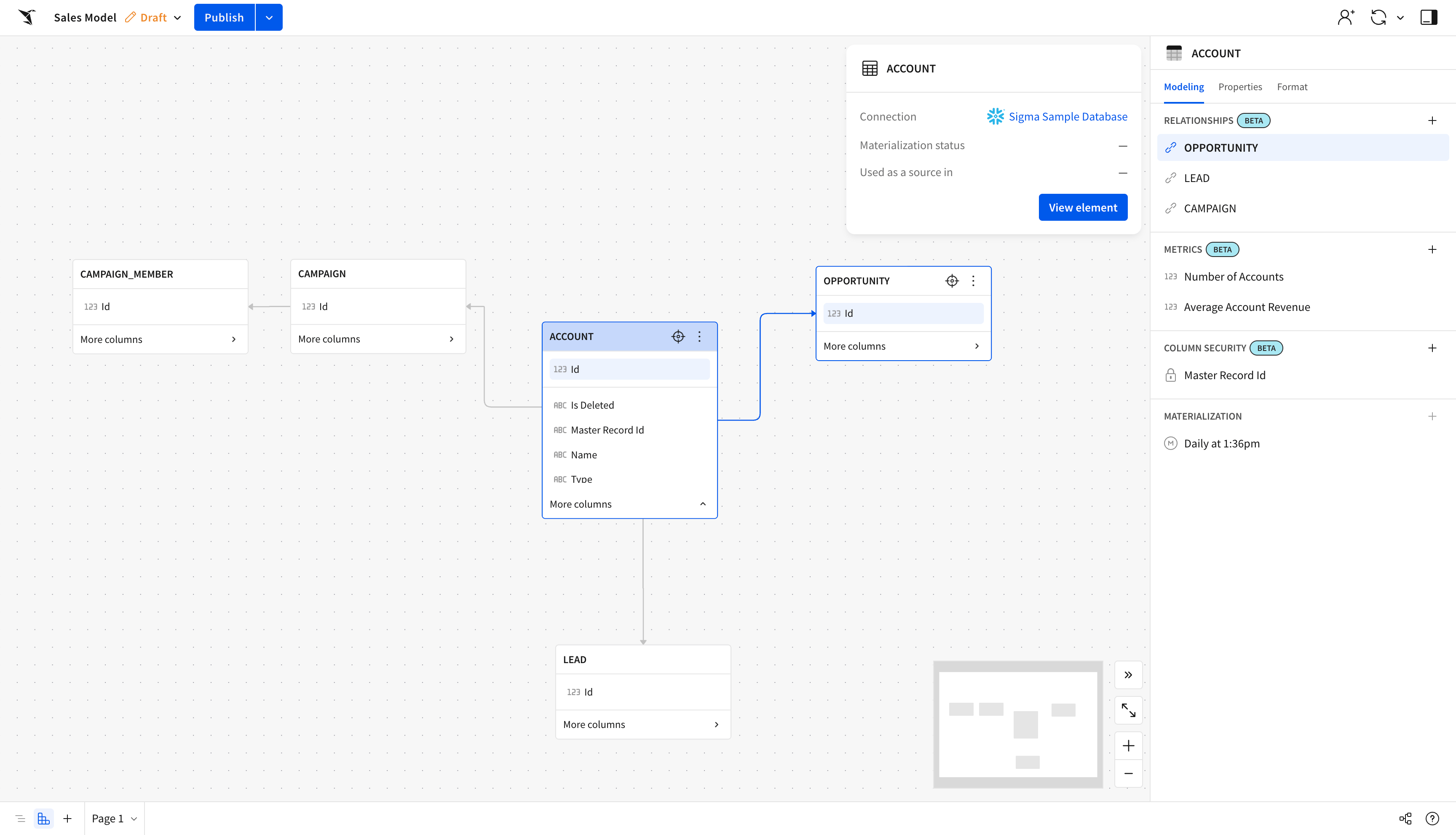

For analytics platforms to deliver useful AI outputs, they need context from multiple sources. In Sigma, this is done by combining three key pillars:

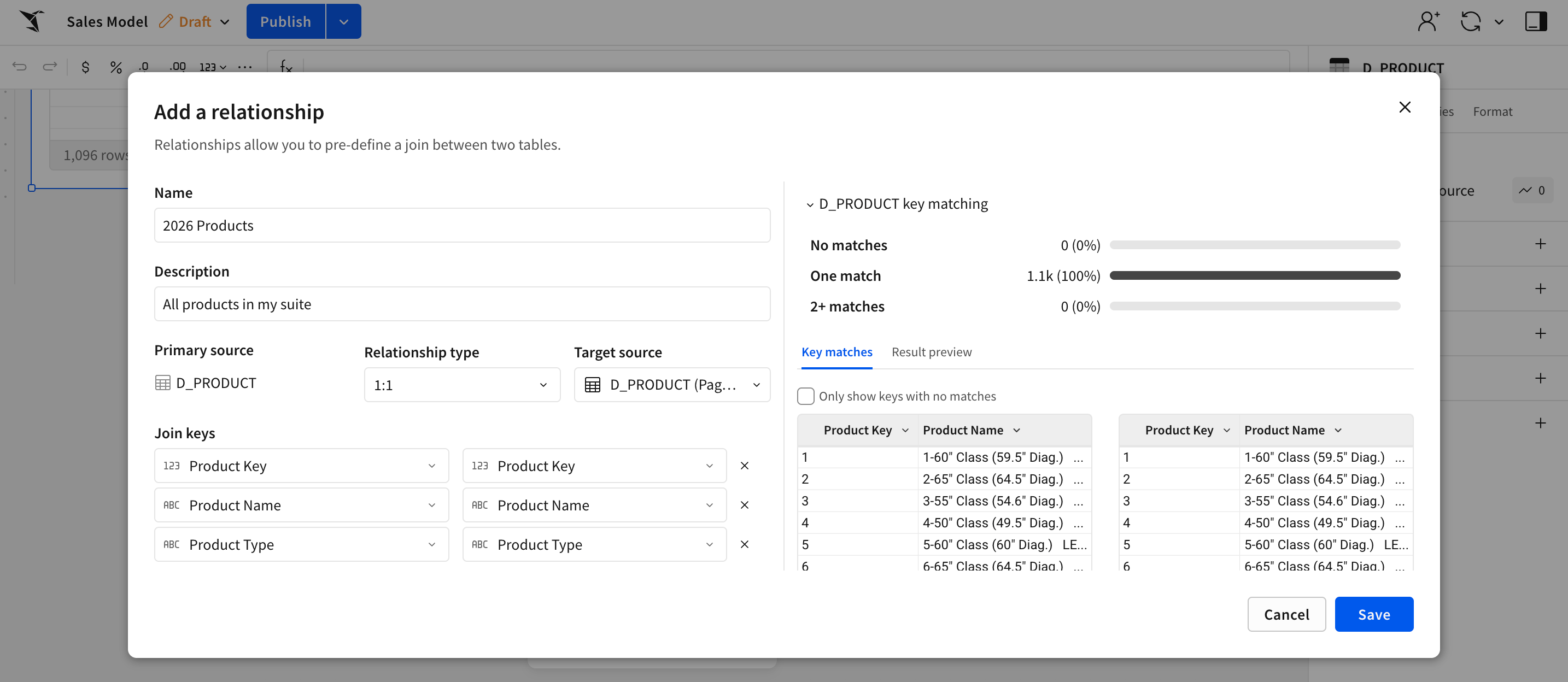

1. Data Warehouse & Transformation

A complete, continuously updated index of warehouse metadata—schemas, tables, columns, lineage, dbt models, and transformation logic—gives agents the technical map of how data is structured and created.

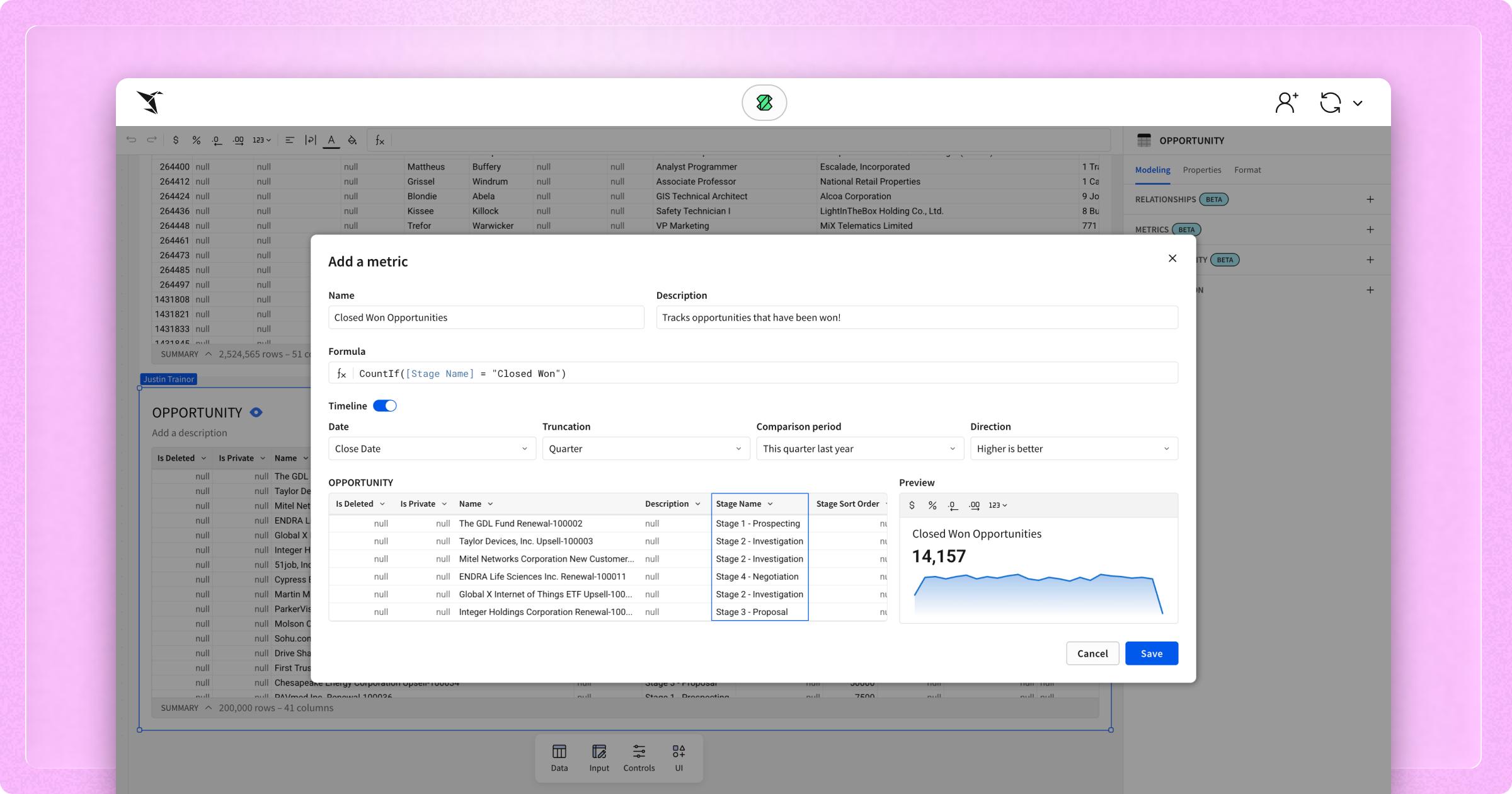

2. Semantic Layer

Integrations with semantic and governance layers like Semantic Views & dbt provide authoritative definitions for metrics and business logic. This ensures that AI-generated queries aren’t just technically correct, but aligned with the organization’s unique definitions.

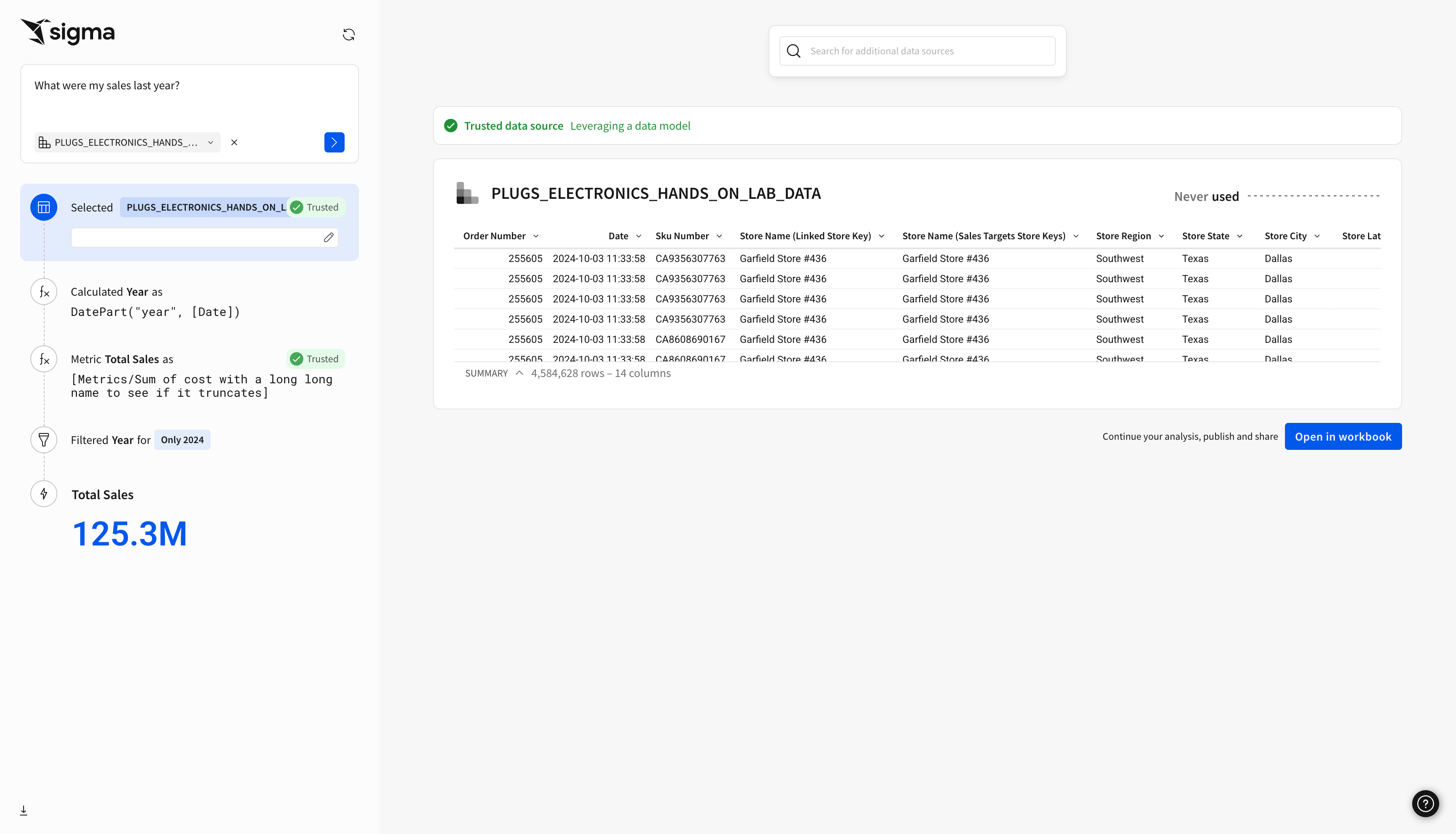
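To make the idea of authoritative definitions concrete, here is a minimal sketch of resolving a business term to a governed metric before any SQL is generated. Everything in it is an illustrative assumption rather than Sigma's implementation: the `MetricDefinition` type, the `METRICS` registry, and the 30-day definition of "active customers" are hypothetical, and a real setup would load definitions from the semantic layer instead of hard-coding them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    sql_expression: str
    description: str


# Hypothetical governed definitions; in practice these would be loaded
# from a semantic layer rather than written inline.
METRICS = {
    "active_customers": MetricDefinition(
        name="active_customers",
        # Illustrative SQL only; date arithmetic syntax varies by warehouse.
        sql_expression=(
            "COUNT(DISTINCT CASE WHEN last_order_date >= CURRENT_DATE - 30 "
            "THEN customer_id END)"
        ),
        description="Customers with at least one order in the last 30 days.",
    ),
}


def normalize(term: str) -> str:
    """Lowercase a phrase and join its words with underscores."""
    return "_".join(term.lower().split())


def resolve_metric(term: str) -> Optional[MetricDefinition]:
    """Return the governed definition for a term, or None if none exists."""
    return METRICS.get(normalize(term))
```

Returning `None` for unknown terms is deliberate in this sketch: an agent can then ask the user for clarification instead of guessing at a definition.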

3. User Input

Understanding how users interact with data—what they access, trust, reuse, or ignore—adds a behavioral dimension that models cannot infer from schema alone. This information helps agents surface relevant, high-value context while filtering out stale, unused, or low-quality assets.
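One way to picture this behavioral filtering is a simple ranking over usage signals. The sketch below is a hedged illustration, not how Sigma scores assets: the `AssetUsage` fields, the thresholds, and the sort order are all assumptions chosen for readability.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass(frozen=True)
class AssetUsage:
    name: str
    views_last_90_days: int
    distinct_users: int
    last_accessed: date


def rank_assets(
    assets: List[AssetUsage],
    today: date,
    max_age_days: int = 180,  # assumed staleness cutoff
    min_users: int = 2,       # assumed threshold for "more than one person relies on this"
) -> List[AssetUsage]:
    """Drop stale or single-user assets, then sort the rest by breadth of use."""
    cutoff = today - timedelta(days=max_age_days)
    fresh = [
        a for a in assets
        if a.last_accessed >= cutoff and a.distinct_users >= min_users
    ]
    # Rank by how many people use the asset first, then by total views.
    return sorted(
        fresh,
        key=lambda a: (a.distinct_users, a.views_last_90_days),
        reverse=True,
    )


# Hypothetical usage data for illustration.
assets = [
    AssetUsage("orders_prod", 420, 35, date(2025, 5, 30)),
    AssetUsage("orders_scratch", 12, 1, date(2025, 5, 29)),
    AssetUsage("orders_2019_backup", 3, 4, date(2023, 1, 10)),
    AssetUsage("customers_prod", 300, 35, date(2025, 5, 28)),
]
ranked = rank_assets(assets, today=date(2025, 6, 1))
```

Here the scratch table is filtered out because only one person uses it, and the backup is filtered out because nobody has touched it within the cutoff window.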

When platforms integrate these three context pillars, they can ensure each LLM prompt draws from the most accurate and meaningful information available. Coupled with an interface that teams already use for analytical workflows, this approach supports smoother adoption and more reliable outcomes from AI-driven systems.

Why Context Matters

The most effective AI in analytics does more than just interpret SQL; it captures the business logic around it. By keeping users and workflows inside Sigma, where assets are created and actions are taken, we're providing richer context and more accurate outputs without requiring users to work directly in the warehouse.

A robust context layer should be built from four continuously refreshed sources, with the first pillar split into warehouse metadata and transformation lineage:

- Data Warehouse Metadata: Full visibility into schemas, tables, columns, types, and descriptions provides the structural foundation for accurate query generation.

- Transformation & Lineage: Data from the transformation layer gives agents a clear view of how data was created and prepared, ensuring they rely on trusted, production-ready sources.

- Semantic & Business Logic: Connections to semantic layers and data models supply definitions for metrics, joins, and governance rules that keep AI grounded in business reality.

- Usage Analytics: Behavioral signals show which assets users trust and rely on most, guiding the agent toward relevant context and away from irrelevant or experimental data.

Together, these context sources give an analytics platform a more complete view of both the underlying data and the business logic that governs it. The result is AI-generated outputs that are more accurate, consistent, and aligned with the decisions people actually need to make.
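As a rough sketch of how the four sources listed above might come together for a single request, the function below assembles a plain-text context block for a prompt. The function name, its parameters, and the output layout are hypothetical; this shows one simple way to combine the inputs under stated assumptions and does not describe Sigma's internals.

```python
from typing import Dict, List


def build_context(
    question: str,
    table_columns: Dict[str, List[str]],  # warehouse metadata: table -> columns
    production_tables: List[str],         # transformation and lineage: trusted tables
    metric_definitions: Dict[str, str],   # semantic layer: metric -> definition
    tables_by_usage: List[str],           # usage analytics: most-used first
    max_tables: int = 3,
) -> str:
    """Assemble a compact context block from the four sources."""
    trusted = set(production_tables)
    # Keep usage order, but only for tables that are production-ready
    # and whose columns are known.
    selected = [
        t for t in tables_by_usage
        if t in trusted and t in table_columns
    ][:max_tables]

    lines = ["Tables (most used first):"]
    for table in selected:
        lines.append(f"- {table}({', '.join(table_columns[table])})")
    lines.append("Metric definitions:")
    for name, definition in sorted(metric_definitions.items()):
        lines.append(f"- {name}: {definition}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


# Hypothetical inputs for illustration.
context = build_context(
    question="What was revenue last month?",
    table_columns={"orders": ["id", "amount"], "tmp_orders": ["id"]},
    production_tables=["orders"],
    metric_definitions={"revenue": "SUM(amount)"},
    tables_by_usage=["tmp_orders", "orders"],
)
```

Even though `tmp_orders` is the most-used table in this example, it never reaches the prompt because lineage does not mark it as production-ready. That is the point of layering the sources: each one can veto or reorder what the others suggest.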

Path Forward: From Analytics to Intelligent Applications

The next generation of enterprise applications will be grounded in trustworthy data, governed semantics, and human behavior, with AI as an active collaborator in every analytical workflow.

Want to learn more about how context elevates AI from answering questions to driving workflows? Check out our latest product launch and see how Sigma’s contextual AI layer powers interactive, data-driven applications, turning plain-language ideas into fully built, governed outputs with AI Builder (private beta).