Introducing RADAR: The New Standard For Proactive Support

Support doesn’t start with a ticket anymore. It starts with a signal in Sigma.

That’s where RADAR comes in—our system for Rapid Anomaly Detection, Analysis, and Response. It helps us spot friction early and act fast—sometimes before customers even realize there’s a problem.

RADAR monitors real-time experience data, identifies meaningful disruptions, and alerts our team with the context they need to reach out, fast. It’s not just early detection. It’s precision support, delivered when it matters most.

One dashboard to crash them all

It started with a simple workbook update. One of our customers, a car reconditioning business, had dashboards displayed on TVs across their centers—auto-refreshing, always on. But after an end-of-week publish, those dashboards quietly stopped using a materialization and began hammering the warehouse with very expensive live queries, which their warehouse was not sized to handle. Great way to start a weekend, right?

By Saturday morning, the cloud data warehouse was overloaded. Underwriting agents couldn’t do their jobs. The business ground to a halt. It looked like a DDoS attack—but it came from inside the house. And no one realized until it was already too late. It was the perfect storm.

Preventing incidents like this became our North Star. We could piece together what happened after the fact, but we wished we had seen it coming. The question that kept nagging at us was: how can we get ahead of this stuff and be proactive instead of reactive?

‘Cause you might have had a bad day—and Sigma turned it around

Most support teams wait for a customer to call with a problem. We’d rather catch the issue before customers even think to pick up the phone.

That’s the thinking behind RADAR. It doesn’t just look for failures—it looks for impact. Who’s actually having a bad day? How many users are affected, how many workbooks, how often, and where? With RADAR, we go beyond raw error counts to surface the context that matters: when something started, how it’s evolving, and what workflows it’s disrupting.

That context is key. And in a world full of alerts, we want to be the one customers pay attention to. That’s why we only reach out when there’s something worth acting on—and while most companies monitor errors by system and server, we’re now monitoring by customer as well.

Here’s what that looks like in action:

- We spotted a spike in query timeouts. The customer traced it back to warehouse contention and killed the competing load—problem solved.

- We flagged errors from a warehouse view change that silently broke downstream workbooks. Our heads-up helped the customer notify the right team before users even noticed.

- We caught a permissions glitch tied to bookmarks in embeds and escalated it straight to our engineering team, and the issue was fixed before our customers’ users became aware of it.

- We saw behavioral drift from a cloud warehouse rollout that only affected a handful of customers. Because we caught it early, we worked with the vendor to minimize broader impact.

No matter the root cause, our mindset stays the same: this customer is in pain. Let’s help. RADAR scores and quantifies the risk, and gives our team the tools to act fast and precisely.

It’s not just proactive support. It’s personalized, context-driven intervention—before anyone files a ticket.

How we built a system that sniffs out trouble

RADAR didn’t come out of nowhere. It rests on three key pillars: our tracing infrastructure, Sigma’s powerful modeling capabilities, and a custom scoring system that helps us prioritize what matters.

The tracing infrastructure: RADAR’s superpower

Before RADAR could detect anything, we needed to see what was happening—clearly, consistently, and in real time. That’s where Sigma’s tracing infrastructure comes in. And it’s one of the biggest reasons RADAR works at all.

When I joined Sigma, I was blown away. After years of wrestling chaotic logs—regexing through debug prints, zipping and unzipping phone-home files, scraping together meaning from on-prem servers and sprawling cloud storage—this was something else entirely.

Instead of a mess of logs stitched together after the fact, Sigma’s engineering team had already built a structured, elegant system. Everything was being logged in JSON, stored in an object store, and ETL’d into a cloud data warehouse in real time. Not thousands of scattered tables. Not one table per log line. Just a clean, normalized model.

At the center is a fact table called EVENTS, capturing metadata (but not customer data) around everything that happens across the product. Around it, dimension tables—users, workbooks, connections—add rich context. Together, this creates a complete, queryable view of system behavior, fully attributed to real users and real workflows.

That’s the magic. It’s the foundation RADAR is built on. It gives us the raw signals we need—clean, connected, and ready to analyze. What used to take days of forensic guesswork is now a simple Sigma table with filters on it.
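Here is a minimal sketch of the fact-and-dimension shape, using Python’s built-in `sqlite3`. The table and column names (`events`, `users`, `workbooks`, `org_id`, `error_code`) are hypothetical stand-ins, not Sigma’s actual schema, and the rows are made up. It shows how one join plus a `GROUP BY` attributes every error to a customer, its users, and its workbooks.

```python
import sqlite3

# Hypothetical, heavily simplified schema: one fact table plus two dimensions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users     (user_id INTEGER PRIMARY KEY, org_id TEXT);
CREATE TABLE workbooks (workbook_id INTEGER PRIMARY KEY, org_id TEXT, name TEXT);
CREATE TABLE events (
    event_id    INTEGER PRIMARY KEY,
    ts          TEXT,
    user_id     INTEGER REFERENCES users(user_id),
    workbook_id INTEGER REFERENCES workbooks(workbook_id),
    event_type  TEXT,  -- e.g. 'query' or 'error'
    error_code  TEXT   -- NULL unless event_type = 'error'
);
""")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [(1, "acme"), (2, "acme"), (3, "globex")])
conn.executemany("INSERT INTO workbooks VALUES (?, ?, ?)",
                 [(10, "acme", "Ops TV"), (11, "acme", "Finance"), (20, "globex", "Sales")])
conn.executemany("INSERT INTO events VALUES (?, ?, ?, ?, ?, ?)", [
    (100, "2024-06-01T08:00", 1, 10, "error", "QUERY_TIMEOUT"),
    (101, "2024-06-01T08:05", 2, 10, "error", "QUERY_TIMEOUT"),
    (102, "2024-06-01T08:06", 2, 11, "query", None),
    (103, "2024-06-01T08:07", 3, 20, "query", None),
])

# Join the fact table to its dimensions so each error is attributed to a
# customer (org), then count how many users and workbooks it touched.
rows = conn.execute("""
SELECT u.org_id,
       e.error_code,
       COUNT(*)                      AS errors,
       COUNT(DISTINCT u.user_id)     AS users_affected,
       COUNT(DISTINCT w.workbook_id) AS workbooks_affected
FROM events AS e
JOIN users     AS u ON u.user_id = e.user_id
JOIN workbooks AS w ON w.workbook_id = e.workbook_id
WHERE e.event_type = 'error'
GROUP BY u.org_id, e.error_code
ORDER BY errors DESC
""").fetchall()
print(rows)  # [('acme', 'QUERY_TIMEOUT', 2, 2, 1)]
```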

Most companies spend years trying to build this kind of observability. Sigma had it from the start. And it’s what lets us do something most systems can’t: detect pain, not just failure—and know who it’s affecting, where, and why.

Turning signals into stories with Sigma’s modeling capabilities

With high-quality event data flowing into the warehouse, the next step was turning that data into insight—specifically, understanding which customers are having a bad day, and why.

We started by focusing on errors, asking a deceptively simple question: who’s actually being impacted? That means looking at context, not just volume. For each error, we consider:

- How many unique users are affected?

- How many documents or dashboards are involved?

- Does this have a downstream impact on our customers' users?

- Which part of the workflow is impacted—creation or consumption?

- Was it triggered while a user was viewing a published workbook, or just editing it?

- Is this a recurring issue, or something new?
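The qualitative questions in that list reduce to a few flags per error signature. This minimal sketch assumes that each error event records three things: whether it happened while viewing or editing, whether it surfaced in an embed, and how many days ago it occurred. Those field names are hypothetical. Treating an embedded error as downstream impact on our customers’ users is also an assumption. Counting unique users and workbooks is a plain distinct count, so it is left out here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorEvent:
    user_id: str
    workbook_id: str
    mode: str       # hypothetical: "view" (published workbook) or "edit"
    is_embed: bool  # hypothetical: did the error surface inside an embed?
    days_ago: int   # 0 = today


def classify(events: list[ErrorEvent]) -> dict[str, bool]:
    """Answer the context questions for one error signature."""
    today = [e for e in events if e.days_ago == 0]
    return {
        # Assumed: embedded errors reach our customers' own users downstream.
        "downstream_impact": any(e.is_embed for e in today),
        # Viewing a published workbook is consumption; editing is creation.
        "consumption": any(e.mode == "view" for e in today),
        "creation": any(e.mode == "edit" for e in today),
        # Any occurrence before today makes it recurring rather than new.
        "recurring": any(e.days_ago > 0 for e in events),
    }


flags = classify([
    ErrorEvent("u1", "wb1", "view", True, 0),
    ErrorEvent("u2", "wb1", "view", False, 0),
])
print(flags)  # {'downstream_impact': True, 'consumption': True, 'creation': False, 'recurring': False}
```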

That’s how we separate background noise from real disruption.

But we also bring in historical context. For each customer, we extend our view across the last 7 days, then compare today’s error frequency to their historical norm. That helps us deprioritize noisy errors that happen all the time, and zero in on unusual spikes.
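One simple way to express “compare today to the historical norm” is a ratio against a trailing seven-day baseline. This sketch uses the mean of the prior days as the norm and adds one to both sides so a customer with a clean history doesn’t cause a division by zero. Both choices are assumptions, not necessarily how RADAR computes it.

```python
from statistics import mean


def spike_ratio(today_count: int, prior_counts: list[int]) -> float:
    """Ratio of today's error count to the trailing 7-day norm.

    Using the mean as the "norm" and the +1 smoothing are assumptions for this sketch.
    """
    window = prior_counts[-7:]                 # most recent 7 prior days
    baseline = mean(window) if window else 0.0
    return (today_count + 1) / (baseline + 1)  # +1 avoids dividing by zero


# An error that fires all the time scores near 1; a brand-new spike stands out.
print(spike_ratio(50, [48, 52, 50, 49, 51, 50, 50]))  # 1.0
print(spike_ratio(40, [0, 0, 0, 0, 0, 0, 0]))         # 41.0
```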

Next, we calculate denominators (total queries and distinct users per day) so we can compute error rates, not just counts. These models started as exploratory builds inside Sigma (and yes, the JSON parsing was heavy), but once we validated the logic, we moved them into dbt and Snowflake’s dynamic tables. Now the core event pipeline can surface an issue within five minutes, though today we run the full RADAR workflow hourly, mostly due to ticketing and human-in-the-loop constraints.

From there, it’s just a matter of aggregation. To answer “who’s having a bad day today?” we group and calculate:

- Total evals and distinct users per customer

- Error counts and their percentage of total evals/users

- Number of impacted workbooks

- Context flags: embed, export, edit

- Max prior-day error counts (to spot spikes)
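A plain-Python version of that per-customer rollup might look like the following. It is a sketch under assumptions: the input shapes and field names are hypothetical, and so is treating every error as one of the day’s evals. The production version lives in dbt and Snowflake dynamic tables, not in application code.

```python
from collections import defaultdict


def bad_day_report(evals, errors_today, prior_daily_errors):
    """Per-customer rollup for "who's having a bad day today?".

    evals:              iterable of (customer, user_id), one per eval today
    errors_today:       iterable of (customer, user_id, workbook_id, flags),
                        where flags is a set drawn from {"embed", "export", "edit"}
    prior_daily_errors: dict of customer -> error counts on previous days
    """
    eval_count = defaultdict(int)
    users = defaultdict(set)
    for customer, user in evals:
        eval_count[customer] += 1
        users[customer].add(user)

    error_count = defaultdict(int)
    error_users = defaultdict(set)
    workbooks = defaultdict(set)
    flags = defaultdict(set)
    for customer, user, workbook, context in errors_today:
        error_count[customer] += 1
        error_users[customer].add(user)
        workbooks[customer].add(workbook)
        flags[customer] |= context

    report = {}
    for customer, n_errors in error_count.items():
        n_evals = max(eval_count[customer], 1)  # guard against divide-by-zero
        n_users = max(len(users[customer]), 1)
        report[customer] = {
            "total_evals": eval_count[customer],
            "distinct_users": len(users[customer]),
            "errors": n_errors,
            "pct_evals_errored": 100 * n_errors / n_evals,
            "pct_users_errored": 100 * len(error_users[customer]) / n_users,
            "workbooks_impacted": len(workbooks[customer]),
            "flags": sorted(flags[customer]),
            "max_prior_day_errors": max(prior_daily_errors.get(customer, []), default=0),
        }
    return report


report = bad_day_report(
    evals=[("acme", "u1"), ("acme", "u2"), ("acme", "u3"), ("acme", "u4")],
    errors_today=[("acme", "u1", "wb1", {"embed"}), ("acme", "u1", "wb2", {"export"})],
    prior_daily_errors={"acme": [0, 1, 0]},
)
print(report["acme"])
```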

This model is the heart of RADAR—it doesn’t just show us that something’s wrong, it shows us why it matters.

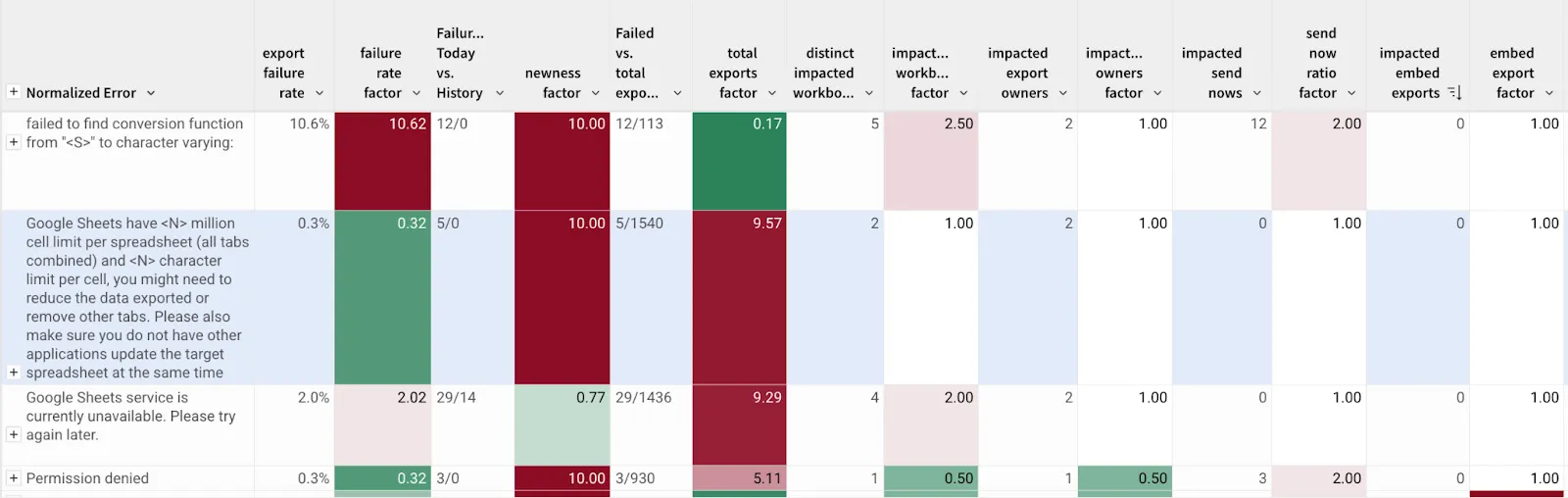

Figuring out what hurts most with a custom scoring system

By this point, we had a stream of well-modeled signals coming in—counts of errors, users impacted, exports broken, embeds affected. Now we needed a way to combine them and answer a key question: Is this a real problem, or did someone just press the wrong button?

The first scoring pass was mostly math: multiplication, division, and a set of tunable weights based on context. For example, embed users might count 10x more than standard users, and errors affecting exports might get a 100x multiplier. Unsurprisingly, these calculations produced some comically large numbers. Scientific notation became our friend.
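This minimal sketch shows how quickly a multiplicative score balloons. The 10x embed and 100x export weights come from the examples above. The other signal names, their weights, the sample values, and the rule of skipping zero-valued signals are hypothetical.

```python
import math

# Hypothetical weights; only the embed (10x) and export (100x) values come from the text.
WEIGHTS = {"users": 1, "embed_users": 10, "errors": 1, "export_errors": 100, "workbooks": 1}


def raw_score(signals: dict[str, float]) -> float:
    """Multiply every weighted signal together.

    Zero-valued signals are skipped so that one absent factor doesn't zero
    out the whole score (an assumption for this sketch).
    """
    return float(math.prod(WEIGHTS[name] * value
                           for name, value in signals.items() if value > 0))


score = raw_score({"users": 120, "embed_users": 40, "errors": 3000,
                   "export_errors": 250, "workbooks": 35})
print(f"{score:.2e}")  # 1.26e+14
```

Five modest signals already produce a 15-digit number. One more factor of ten would push this customer past a quadrillion.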

We eventually landed on a threshold for action: 1e15—yes, that’s one quadrillion. If something crosses that line, it’s worth a human investigation. But we didn’t just set it and forget it. As more “this should’ve been on our radar” incidents surfaced, we kept calibrating—refining the scoring algorithm to cut noise and highlight the problems that actually matter. We also embarked on a normalization effort, bringing each scoring factor into a consistent range (say, 0.1 to 10) so the resulting scores are more interpretable for humans, not just machines.
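Here is one way to bring a factor into the 0.1 to 10 range mentioned above. The value is clamped to calibrated bounds, then interpolated geometrically (on a log scale). With this mapping, the geometric midpoint of the bounds maps to 1, which leaves a product score unchanged. The log-scale mapping and the per-factor bounds are assumptions for illustration, not necessarily RADAR’s normalization.

```python
import math


def normalize(value: float, lo: float, hi: float,
              out_lo: float = 0.1, out_hi: float = 10.0) -> float:
    """Map value from [lo, hi] onto [out_lo, out_hi] on a log scale.

    lo and hi are per-factor calibration bounds (hypothetical); values
    outside them are clamped so a single outlier can't dominate the score.
    """
    if not 0 < lo < hi:
        raise ValueError("bounds must satisfy 0 < lo < hi")
    v = min(max(value, lo), hi)
    t = (math.log(v) - math.log(lo)) / (math.log(hi) - math.log(lo))  # 0..1
    return out_lo * (out_hi / out_lo) ** t


# Users affected, calibrated against a hypothetical 1 to 10,000 range.
for users in (1, 100, 10_000, 50_000):
    print(users, round(normalize(users, 1, 10_000), 3))  # 0.1, 1.0, 10.0, 10.0 (clamped)
```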

On each run (currently hourly), the system generates a report. If any customer’s error score exceeds 1e15, it sends an alert to a dedicated Slack channel monitored by our TSEs. From there, someone steps in to investigate and reach out.
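The alerting step itself is a filter and a sort. This sketch builds the alert text for every customer above the 1e15 threshold, worst first. It deliberately stops short of posting to Slack, because the webhook or bot setup isn’t described here. The message wording is hypothetical.

```python
THRESHOLD = 1e15  # the action threshold described above


def build_alerts(scores: dict[str, float], threshold: float = THRESHOLD) -> list[str]:
    """Return one alert line per customer whose score exceeds the threshold, highest first."""
    breaching = sorted(((score, customer) for customer, score in scores.items()
                        if score > threshold), reverse=True)
    return [f"RADAR: {customer} error score {score:.2e} exceeds {threshold:.0e}"
            for score, customer in breaching]


alerts = build_alerts({"acme": 3.2e15, "globex": 4.0e12, "initech": 1.1e16})
for line in alerts:
    print(line)
# RADAR: initech error score 1.10e+16 exceeds 1e+15
# RADAR: acme error score 3.20e+15 exceeds 1e+15
```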

The outreach itself is powered by dynamic templates that auto-fill with real data:

“This error is impacting 18% of your users and 12 workbooks. It started this morning and appears tied to a scheduled export.”
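A minimal version of such a template can use Python’s standard `string.Template`. The placeholder names and the input dictionary below are hypothetical. The rendered text reproduces the example message above.

```python
from string import Template

# Hypothetical template modeled on the example message; placeholder names are assumptions.
OUTREACH = Template(
    "This error is impacting $pct_users% of your users and $workbooks workbooks. "
    "It started $started and appears tied to $suspected_cause."
)


def render_outreach(row: dict) -> str:
    # Round the percentage for readability, keep every other field as-is.
    fields = dict(row, pct_users=round(row["pct_users"]))
    # substitute() (unlike safe_substitute()) raises KeyError if any
    # placeholder is missing, so a half-filled message can't slip through.
    return OUTREACH.substitute(fields)


message = render_outreach({"pct_users": 18.2, "workbooks": 12,
                           "started": "this morning",
                           "suspected_cause": "a scheduled export"})
print(message)
```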

The TSE can then add any additional context uncovered during investigation and send it out in a support ticket—fast, precise, and personalized. At this scale, timing isn’t just helpful. It’s the difference between an inconvenience and a full-on outage.

How we made these scores behave

This version of our scoring system did exactly what we needed: it surfaced real, high-impact problems and helped us reach customers before they even realized something was wrong. It worked so well that we were quickly seeing response rates over 30%.

But success came with a scaling problem.

Once we set the 1e15 threshold, it became harder to introduce new scoring factors. Every new multiplier pushed the numbers up—and suddenly everything was breaching the threshold, whether it deserved to or not.

Normalizing each scoring factor into a consistent range (say, 0.1 to 10) gave us a far more stable and interpretable system, one where each factor contributes meaningfully without distorting the full picture. We rolled this out first for export errors, and it’s already improved how we track and visualize pain. We now render the weights as a color scale, so we can see at a glance which factors are driving urgency and which ones are just noise.
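Why does normalization tame the threshold problem? This is a sketch that assumes the score is still a product of factors, since the exact formula isn’t spelled out here. With $k$ factors, each bounded to $f_i \in [0.1, 10]$, every factor’s effect on the score’s order of magnitude is bounded too:

$$
S = \prod_{i=1}^{k} f_i, \qquad
\log_{10} S = \sum_{i=1}^{k} \log_{10} f_i, \qquad
-1 \le \log_{10} f_i \le 1
\;\Longrightarrow\; -k \le \log_{10} S \le k .
$$

A new factor can therefore move the score by at most one order of magnitude in either direction. An unnormalized factor can move it by an arbitrary amount, which is what kept pushing everything over 1e15.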

We’re just getting started

RADAR has already changed how we think about support—but this is only the beginning. As we continue investing in this system, we’re focusing on two core areas of improvement: coverage and communications.

Coverage: From errors to performance pain

So far, RADAR has focused on clear failure signals—errors in the warehouse query path, issues in the embed layer, and most recently, failures in scheduled exports. But not all pain shows up as an error.

In fact, poor performance often hurts more than outright failure. Long load times. Latency spikes. A dashboard that technically renders, but takes 45 seconds to do so. That kind of experience drags down trust—even if there’s no red error message.

So, we’re now working on applying RADAR’s context-aware approach to performance and warehouse spend. Using the same modeling techniques—user impact, historical baselines, embedded usage—we want to highlight when users suddenly start experiencing unusually poor performance.
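Applied to performance, the same baseline idea might compare a customer’s p95 load time today with their historical p95. In this sketch, the 95th percentile and the 2x regression factor are assumptions. `statistics.quantiles` needs at least two samples per list.

```python
from statistics import quantiles


def p95(samples_ms: list[float]) -> float:
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return quantiles(samples_ms, n=20)[-1]


def latency_regressed(today_ms: list[float], baseline_ms: list[float],
                      factor: float = 2.0) -> bool:
    """Flag when today's p95 load time exceeds `factor` times the historical p95."""
    return p95(today_ms) > factor * p95(baseline_ms)


baseline = [1_000 + 10 * i for i in range(100)]  # made-up load times, 1.0 to 1.99 s
slow_day = [3 * ms for ms in baseline]           # everything 3x slower
print(latency_regressed(baseline, baseline))  # False
print(latency_regressed(slow_day, baseline))  # True
```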

We're also looking at how materializations are (or aren’t) being used. When leveraged properly, they’re key to delivering fast, efficient workbooks. When misconfigured or forgotten, they become silent cost and performance liabilities. We already monitor for unused materializations and pause them after 60 days of inactivity. Soon, we want to go further—flagging situations where materializations aren’t being used as expected, and guiding teams to make better decisions around performance and cost.
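The 60-day pause rule reduces to a date comparison. This sketch assumes a hypothetical mapping from materialization ID to the date it was last used. How that date is tracked, and how a pause is actually issued, aren’t described here. Treating exactly 60 idle days as eligible is also an assumption.

```python
from datetime import date, timedelta

INACTIVITY_LIMIT = timedelta(days=60)  # pause window described above


def materializations_to_pause(last_used: dict[str, date], today: date) -> list[str]:
    """Return IDs of materializations unused for at least 60 days, sorted."""
    return sorted(mat_id for mat_id, used in last_used.items()
                  if today - used >= INACTIVITY_LIMIT)


paused = materializations_to_pause(
    {"mat_a": date(2024, 6, 29),   # used yesterday
     "mat_b": date(2024, 4, 1),    # 90 days idle
     "mat_c": date(2024, 5, 1),    # exactly 60 days idle
     "mat_d": date(2024, 5, 2)},   # 59 days idle
    today=date(2024, 6, 30),
)
print(paused)  # ['mat_b', 'mat_c']
```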

Communications: Moving RADAR into the chat layer

Right now, RADAR alerts are sent via our email-based ticketing system, while most real-time support happens in our in-app chat. That’s changing.

We’re migrating RADAR communication into the same chat system we use for live support. That system now includes ticketing functionality, so we can dispatch and manage RADAR alerts without leaving the chat interface. That means faster handoffs, tighter context, and a more unified customer experience.

Even better, we’re using this migration as a chance to dogfood our own data apps capabilities. Much of the RADAR workflow—ticket creation, triage, initial communication—will be driven from Sigma workbooks via API.

A new kind of support

RADAR gives us a new superpower: the ability to detect real, business-impacting pain—before it snowballs.

It works because of the infrastructure Sigma invested in from day one: clean, structured logs flowing into a warehouse, enriched with product metadata, and made explorable in real time. That foundation lets us connect issues to specific users, workbooks, and workflows—and respond with precision.

Most observability tools can’t do this. They’re built to monitor infrastructure, not user experience. RADAR flips that script, turning usage data into context and context into action. And we’re just getting started: we’re extending coverage into performance and cost, and streamlining how RADAR runs behind the scenes to match the real-time responsiveness of our chat-based support.

Because no one should have to file a ticket to get help with a problem we can see coming.