How I Use AI Agents To Eliminate Hours Of Repetitive Data Work


As an Analytics Engineer, I used to spend entire afternoons doing the kind of work that doesn’t need your brain, just your time. Things like updating column definitions, or copy-pasting documentation, or manually tracing lineage through ten different files. None of it was hard. It was just... repetitive.

Then I tried an AI coding assistant. I gave it some basic context, hit return, and watched it do in under two minutes what normally took me three hours. That’s when it clicked: this isn’t about replacing anyone, but about finally having a tool that handles the boring parts. That frees up time for the work that actually needs an analytics engineer: projects that depend on business context and strategic planning.

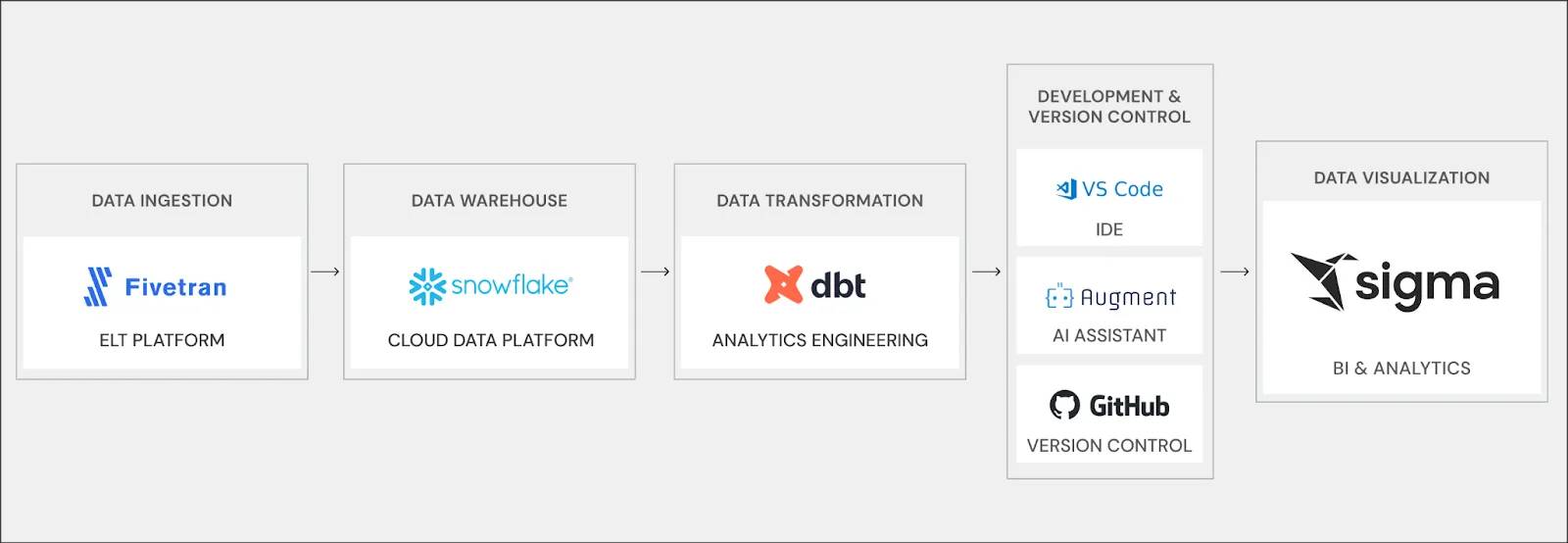

If you’re in data and wondering where AI fits into your day-to-day, this is what it’s looked like for me: the tools I use, what’s changed, and why I think this shift marks a turning point for us all.

Productivity, not just product: How AI is reshaping my day-to-day

Right now, in the data world, AI is showing up in two big ways. First, companies are trying to build it into their products, which is obviously important. But second, and maybe more immediately useful, is how individual people are using AI to make their own work more efficient.

Especially on smaller teams, where everyone’s wearing five hats, the focus is on personal and team productivity. For me, it started with tasks I knew were tedious: things like adding new columns that the engineering team had introduced into the database, then mapping them across multiple files in our production codebase. You had to manually update the field, add the right definitions, and make sure the descriptions were clear, over and over again. That would take me 2–3 hours. Now, with coding assistants like Augment, I give the model some basic context and it’s done in under a minute.
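To make that column-mapping chore concrete, here is a minimal Python sketch of its mechanical core: diffing the columns that exist in the warehouse against the ones already documented, then emitting placeholder entries. It assumes a dbt-style `schema.yml` layout and plain in-memory inputs, neither of which the workflow above specifies; `find_undocumented_columns` and `stub_entries` are hypothetical helpers, not part of Augment or any library.

```python
from typing import Dict, Iterable, List


def find_undocumented_columns(
    warehouse_columns: Iterable[str], documented: Dict[str, str]
) -> List[str]:
    """Return warehouse columns with no documentation entry, lowercased and sorted.

    Names are compared case-insensitively because warehouses such as Snowflake
    report unquoted identifiers in upper case while docs often use lower case.
    """
    documented_names = {name.lower() for name in documented}
    return sorted({col.lower() for col in warehouse_columns} - documented_names)


def stub_entries(columns: Iterable[str]) -> str:
    """Render placeholder column entries in an assumed dbt-style schema.yml layout."""
    lines: List[str] = []
    for col in columns:
        lines.append(f"      - name: {col}")
        lines.append('        description: "TODO: describe this column"')
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical example: engineering added DISCOUNT_CODE and SHIPPED_AT.
    warehouse = ["ORDER_ID", "CUSTOMER_ID", "DISCOUNT_CODE", "SHIPPED_AT"]
    docs = {
        "order_id": "Primary key of the order.",
        "customer_id": "Customer who placed the order.",
    }
    missing = find_undocumented_columns(warehouse, docs)
    print(missing)  # ['discount_code', 'shipped_at']
    print(stub_entries(missing))
```

The descriptions are deliberately left as TODOs: finding the gaps is mechanical, while writing a clear definition is the part that still needs context, whether it comes from a person or from a model that was given that context.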


It’s not just faster; it’s cleaner. There’s less room for human error and fewer missed steps. I also use it to debug: I’ll drop in the error I’m seeing, ask why it might be failing, and half the time the fix is right there.

And it’s not just about technical execution either. I use Claude and ChatGPT as thought partners—less “do this for me” and more “poke holes in my logic” or “help me think through edge cases.” It’s like having someone to bounce ideas off of when you’re stuck. Just faster.

Turning transcripts into product gold with LLMs

That same idea of using AI as a thinking partner has carried into some of the more complex projects I’ve been working on, too. One example is a transcript analysis tool we’re building using Snowflake Cortex.

Every time a user messages our technical support team, that interaction gets logged. These transcripts are full of insight: what people are struggling with, which features they love, where they’re confused, and what bugs they’ve found. But no one has time to read through 50,000 words of raw conversation. So we started using Snowflake Cortex, which provides LLM functions you can call with structured prompts directly in your warehouse.

We’d feed it a transcript and say: Tell me the main question. What solution did we give? Was this a bug report, a feature request, or just an FAQ? And the model would summarize it in seconds. Suddenly, we had a way to surface trends by product area, flag recurring issues, and even loop the right info back to the product team. One transcript becomes insight, and a thousand become a roadmap.
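The summarize-and-classify step can be sketched in Python as three small pieces: a structured prompt that asks the questions above, a parser that validates the model’s JSON reply, and a counter that rolls summaries up by product area. This is a hypothetical illustration, not our production tool: the JSON keys, the category label spellings, and the helper names are assumptions, and the model call itself is omitted. In a Snowflake setup that call would go through a Cortex function (for example `SNOWFLAKE.CORTEX.COMPLETE`); here a hard-coded reply stands in for it.

```python
import json
from collections import Counter
from typing import Dict, Iterable

# Categories follow the questions in the post; the JSON keys are assumptions.
CATEGORIES = {"bug_report", "feature_request", "faq"}
REQUIRED_KEYS = {"main_question", "solution_given", "category", "product_area"}

PROMPT_TEMPLATE = (
    "You are analyzing a customer support transcript.\n"
    "Reply with JSON only, using the keys main_question, solution_given, "
    "category, and product_area.\n"
    "category must be one of: bug_report, feature_request, faq.\n\n"
    "Transcript:\n{transcript}"
)


def build_prompt(transcript: str) -> str:
    """Fill the structured prompt for one transcript."""
    return PROMPT_TEMPLATE.format(transcript=transcript)


def parse_summary(raw: str) -> Dict[str, str]:
    """Parse the model's reply and reject anything that doesn't match the schema.

    LLMs can return output that looks right but isn't, so invalid JSON, a
    missing key, or an off-list category raises ValueError instead of
    silently skewing the trend counts.
    """
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if data["category"] not in CATEGORIES:
        raise ValueError(f"unexpected category: {data['category']!r}")
    return data


def trends_by_area(summaries: Iterable[Dict[str, str]]) -> Counter:
    """Count (product_area, category) pairs across many parsed summaries."""
    return Counter((s["product_area"], s["category"]) for s in summaries)


if __name__ == "__main__":
    # A hard-coded reply stands in for the actual model call.
    reply = (
        '{"main_question": "Why does my export time out?", '
        '"solution_given": "Split the export into smaller date ranges", '
        '"category": "bug_report", "product_area": "exports"}'
    )
    summary = parse_summary(reply)
    print(trends_by_area([summary, summary]))  # Counter({('exports', 'bug_report'): 2})
```

Validating before counting is the main design choice: a reply that reads fine but uses an unexpected category fails loudly rather than quietly distorting the numbers the product team sees.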


What we’re doing is not just summarizing transcripts, but closing the loop between support, product, and customer success. And that’s where I think the value of human analytics engineers really comes in—not in the coding itself, but in understanding what to look for, how to ask the right questions, and how to turn data into action.

It’s not plug-and-play—it’s learn, adapt, and evolve

If there’s one thing I wish more data teams understood, it’s that AI agents aren’t magic. LLMs hallucinate. A lot. You can’t just plug one in and expect instant productivity gains. You have to learn how these tools think: what context they need, how to structure prompts, how to iterate. Early on, I’d get answers that looked right but didn’t actually save time because I had to redo the work. That defeats the purpose. The real value comes when AI removes the friction, so you can skip the busywork and focus on the parts that actually move things forward.


And that’s exactly why I think the role of the analytics engineer is going to change. It won’t be just about the technical side anymore. AI agents are going to be much better at writing code than we are. They have more memory and more processing power, and they’re only going to get better.

Where we’ll continue to have the moat is in business context—understanding what the company actually needs, what’s happening in the market, where the product is going. That’s the kind of strategic thinking AI can’t do because it doesn’t have that full context. It can’t think forward.

Jake Hannan actually wrote a piece about this shift: Analytics Engineers, It’s Time to Pick a Side. I couldn’t agree more.

That’s the future—and it’s already here.