What 415,000 App Store Reviews Tell Us About How People Actually Use Gen AI


When Harvard Business Review published “How People Are Really Using Gen AI in 2025,” it framed the dominant use cases as things like companionship, advice, and emotional support. That framing got a lot of attention—understandably. Stories about people forming relationships with LLMs are sticky, provocative, and easy to headline.

But something didn’t sit right with me. I’ve seen countless stories about people using ChatGPT for companionship; those stories travel well. In my own work, though, those use cases have always felt like the outliers compared to the sheer volume of people using these models to write marketing copy, study, research, or review code. I wanted to stop speculating about that gap and actually measure it.

Go to the least-performative signal

So I went to the source: the raw, messy, deeply human voices in app review sections. These aren’t Reddit threads where people post for upvotes; they’re written with the developer (and sometimes other potential users) as the audience. People vent, praise, and make feature requests directly to the company. That dynamic strips away a lot of the social-performance bias you see in public forums.

And that context matters. As headlines about “AI psychosis” and AI relationships pile up, and as OpenAI, xAI, and Meta keep pushing LLMs into more corners of everyday life, we need to know how common these uses really are. Meanwhile, governments are fast-tracking AI adoption: pouring money into data centers and energy, and lowering the barriers to using copyrighted material. Decisions are being made quickly. The ground truth needs to move just as fast.

Build a behavioral rubric, not vibes

The methodology was intentionally straightforward. I pulled reviews from the last 16 months for seven leading apps: ChatGPT, Claude, Gemini, Grok, Deepseek, CharacterAI, and Replika. I broke results out by app because, after too much time on Twitter and Reddit, I already knew people gravitate toward certain models for specific jobs.

- Claude tends to draw a professional, engineering-heavy crowd.

- ChatGPT has deeper tool use and broader everyday applications, much like Gemini.

- CharacterAI and Replika are in a completely different lane, built around companionship from day one.

That separation turned out to be important.

Why app reviews? Two reasons:

- Scale. Millions of publicly available data points in one place.

- Candor. The audience is the developer, not an anonymous crowd. That makes the reviews more direct: raw venting, praise, and specific requests, without the “look at me” energy you see on public forums.

We started with around 1.2M raw reviews and ended up with 415,000 that tied directly to specific use categories; a single review could fall into more than one category. With ChatGPT’s help on the first pass, we applied a process that was more about behavior than feelings:

- Theme mining → open coding:

- Found repeated phrases, then tagged a seed set by hand to separate actual “use behaviors” from generic opinion.

- Consolidation:

- Grouped overlaps into seven primary categories — Entertainment, Companionship, Knowledge Access, Learning, Creativity, Productivity, Technical Work — each with its own subcategories for more granularity.

- Cross-model peer review:

- Checked overlaps and blind spots against Claude/Gemini-generated frameworks.

- Rubric design:

- Assigned numeric labels (100–700) to each category and subcategory, and added confidence scores (HIGH, MEDIUM, LOW) for clarity.

- Prompt engineering and few shots:

- Wrote a prompt that we could pass to an LLM to reliably categorize a batch of a few hundred reviews.

- Automation pipeline:

- Ran the prompt in bulk against ChatGPT to categorize the reviews at scale.
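The rubric and pipeline steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the prompt or code actually used: the mapping of the seven categories to codes 100–700 in the order listed, the subcategory codes (such as 210), the prompt wording, and the one-line-per-label `CODE:CONFIDENCE` output format are all hypothetical. `call_model` is a placeholder for whatever LLM client you use; no real API call is shown.

```python
from dataclasses import dataclass
from typing import Callable, Iterable

# Hypothetical mapping: the seven primary categories, in the order listed,
# assigned to codes 100-700. Subcategories would use codes like 210, 220.
CATEGORIES = {
    100: "Entertainment",
    200: "Companionship",
    300: "Knowledge Access",
    400: "Learning",
    500: "Creativity",
    600: "Productivity",
    700: "Technical Work",
}
CONFIDENCE = {"HIGH", "MEDIUM", "LOW"}


@dataclass(frozen=True)
class Label:
    code: int
    category: str
    confidence: str


def build_prompt(review: str, examples: list[tuple[str, str]]) -> str:
    """Assemble a few-shot prompt; the wording here is illustrative only."""
    rubric = "\n".join(f"{code}: {name}" for code, name in CATEGORIES.items())
    shots = "\n\n".join(f"Review: {text}\nLabels: {labels}" for text, labels in examples)
    return (
        "Classify the app review by use behavior, not sentiment.\n"
        f"Categories:\n{rubric}\n"
        "Answer with one CODE:CONFIDENCE per line (HIGH, MEDIUM, or LOW), "
        "or NONE if the review describes no use behavior.\n\n"
        f"{shots}\n\nReview: {review}\nLabels:"
    )


def parse_labels(raw: str) -> list[Label]:
    """Parse model output, dropping any line that doesn't fit the rubric."""
    labels = []
    for line in raw.strip().splitlines():
        code_str, _, conf = line.strip().partition(":")
        if not code_str.isdigit():
            continue  # e.g. "NONE" or free-text chatter from the model
        # Roll a hypothetical subcategory code such as 210 up to its primary, 200.
        primary = int(code_str) // 100 * 100
        conf = conf.strip().upper()
        if primary in CATEGORIES and conf in CONFIDENCE:
            labels.append(Label(primary, CATEGORIES[primary], conf))
    return labels


def classify_all(
    reviews: Iterable[str],
    call_model: Callable[[str], str],  # placeholder for a real LLM client call
    examples: list[tuple[str, str]],
) -> list[list[Label]]:
    """Run the prompt in bulk; each review may get zero, one, or several labels."""
    return [parse_labels(call_model(build_prompt(r, examples))) for r in reviews]
```

For example, if a stand-in model returns `"300:HIGH\n410:medium"`, `parse_labels` yields a Knowledge Access label at HIGH confidence and a Learning label at MEDIUM. Silently dropping malformed lines keeps a bulk run going; a real pipeline would probably log them for review instead.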

What 415,000 reviews actually say

Across all seven apps, Entertainment and Companionship are the top use cases. “Entertainment” covers anything done for fun, curiosity, or downtime. “Companionship” is when the AI serves as a source of empathy, advice, or social connection. That mostly tracks with HBR, but it’s not the whole picture.

Here’s where it gets interesting: CharacterAI and Replika are designed for relationships. Take them out of the mix and the order changes dramatically. Across the remaining apps (ChatGPT, Claude, Deepseek, Gemini, Grok), Companionship drops to #4 and Entertainment to #5. Knowledge Access and Learning jump to the top, with Productivity in third.

That tells me two things. First, depending on which apps you look at, you can tell wildly different stories about “how people use AI.” Second, outside of purpose-built companion tools, most people still use these models for exactly what they were intended to do: answer questions, teach, and help with work.
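The flip in the rankings comes down to which apps go into the count. Here is a minimal sketch of that comparison, assuming each classified review has been flattened into (app, category) pairs, one pair per label, so a review with two labels counts toward both categories. The function name and data shape are hypothetical, and the toy pairs are made up purely for illustration; they are not the study's data.

```python
from collections import Counter
from typing import Iterable

# Purpose-built companion apps, per the split described above.
COMPANION_APPS = frozenset({"CharacterAI", "Replika"})


def rank_categories(
    labeled: Iterable[tuple[str, str]],
    exclude_apps: Iterable[str] = (),
) -> list[tuple[str, int]]:
    """Count (app, category) label pairs by category, skipping excluded apps.

    A review with several labels contributes one pair per label, so it counts
    toward every category it was assigned. Returns categories, most common first.
    """
    excluded = set(exclude_apps)
    counts = Counter(category for app, category in labeled if app not in excluded)
    return counts.most_common()


if __name__ == "__main__":
    # Toy pairs for illustration only; these are NOT the study's data.
    toy = [
        ("Replika", "Companionship"),
        ("Replika", "Companionship"),
        ("CharacterAI", "Entertainment"),
        ("CharacterAI", "Companionship"),
        ("ChatGPT", "Knowledge Access"),
        ("ChatGPT", "Learning"),
        ("Claude", "Knowledge Access"),
    ]
    print(rank_categories(toy))
    # [('Companionship', 3), ('Knowledge Access', 2), ('Entertainment', 1), ('Learning', 1)]
    print(rank_categories(toy, exclude_apps=COMPANION_APPS))
    # [('Knowledge Access', 2), ('Learning', 1)]
```

The same labeled data produces two different "top use cases" depending only on the exclusion set, which is the point about how app selection shapes the story.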

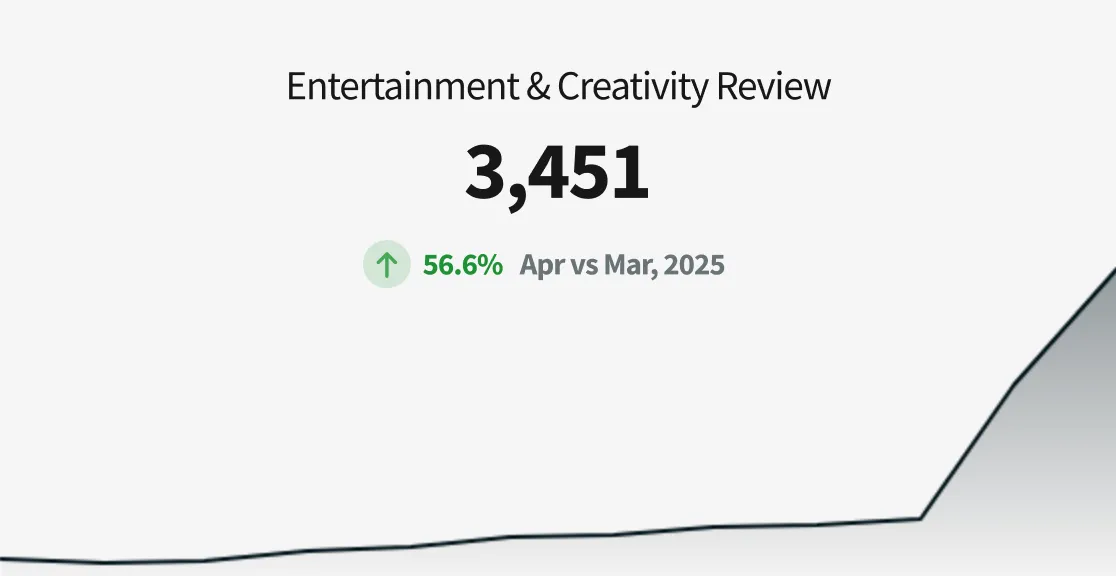

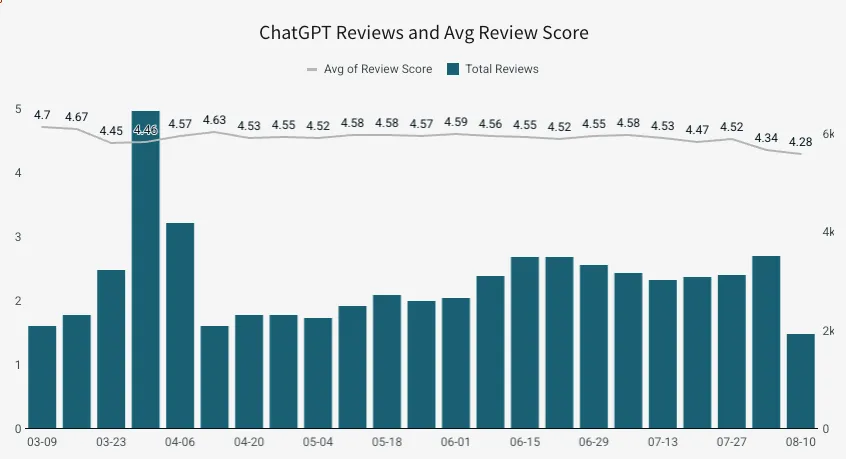

There are spikes, though. ChatGPT usage for entertainment shot up during the Studio Ghibli trend — people prompting it to draw, imagine, or spin stories in a specific style. It’s a reminder that cultural waves still drive bursts of activity that look nothing like the day-to-day baseline. And those waves create spillover expectations: reviewers for Grok and Gemini left negative feedback because they couldn’t “ghibli” their photos, showing how fast cultural memes reshape what people think these tools should be able to do.

HBR pulled from public forums like Reddit and Quora. Those spaces are great for variety and narrative, but they’re also performative. My input was product feedback at scale. Same question, different inputs, and that’s why the outputs diverge. Taken together, the two give a fuller picture: companion use is real but concentrated, while practical use (knowledge, learning, productivity) dominates elsewhere.

What people really want

Across models, the center of gravity is simple: People treat LLMs like teachers, guides, and tools.

- Knowledge access is the “ask a question, get an answer” behavior we’ve all been doing for decades. It’s quick and decisive, and LLMs are increasingly the first stop for this sort of interaction.

- Learning is more intentional: tutoring, step-by-step help, skill-building. When LLMs seem to know everything, they can teach you anything.

Increasingly, this is where the tension lives. Teachers lament the death of homework. Consultants fear prompts replacing the expensive processes they’ve built their careers on. Researchers worry about what the world looks like when all knowledge is truly at our fingertips. And there’s credible reporting from around the globe that AI is straining already fragile education systems.

At work, people who use LLMs seem to fall into two camps: those confident in their own abilities, and those who rely on AI to do everything for them. A recent study from Microsoft and Carnegie Mellon demonstrates how LLMs are already affecting critical thinking in the workplace. Recent papers from Harvard and MIT suggest these effects are likely already holding back children who’d rather take shortcuts than put extra effort into homework.

In the reviews I analyzed, though, I didn’t see much evidence of shortcuts or avoidance. What I saw was eagerness: people picking up new skills, trying new things, asking “dumb” questions they might never ask a person. These results don’t tell us how things will play out in the future. But they do seem to counter the idea, suggested by previous research on the subject, that LLMs are the death knell of education or are already taking over human-to-human interactions.

How to explore the data

The workbook’s built to be explored, not skimmed. You can see it here. Start with the seven main categories, then filter by app or timeframe to see how behaviors shift, like the spike in creative use after ChatGPT’s image model launch. You can stack apps side by side or split the view by platform to see where usage lines up and where it diverges.
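If you export the labels and want to reproduce the timeframe view outside the workbook, a minimal sketch might look like this. It assumes a hypothetical record shape of (app, review date, category), one record per label, and flags "spike" months using an arbitrary threshold of 1.5× the median month; neither the schema nor the threshold comes from the workbook itself.

```python
import statistics
from collections import Counter
from datetime import date
from typing import Iterable


def monthly_share(
    records: Iterable[tuple[str, date, str]], app: str, category: str
) -> dict[tuple[int, int], float]:
    """Share of one app's labels falling in `category`, keyed by (year, month).

    Uses share rather than raw counts so months with more reviews overall
    don't look like spikes just because of volume.
    """
    totals: Counter = Counter()
    hits: Counter = Counter()
    for rec_app, day, cat in records:
        if rec_app != app:
            continue
        month = (day.year, day.month)
        totals[month] += 1
        if cat == category:
            hits[month] += 1
    return {m: hits[m] / totals[m] for m in sorted(totals)}


def flag_spikes(
    shares: dict[tuple[int, int], float], factor: float = 1.5
) -> list[tuple[int, int]]:
    """Months whose share exceeds `factor` times the median month (arbitrary threshold)."""
    if not shares:
        return []
    median = statistics.median(shares.values())
    return [m for m, s in shares.items() if s > factor * median]
```

Comparing against the median rather than the mean keeps a single viral month from inflating the baseline it's being measured against.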

Each category also has its own detail page. That’s where the subcategories live: friendship vs. romance inside “Companionship,” or tutoring vs. skill-building inside “Learning.” I included sample reviews alongside the raw lists so you can see how the labels hold up in context.

This analysis isn't perfect, but it's important to keep researching how AI affects us and how it continues to change the way we interact with the world. We do that by being transparent about the information we have. I wanted to give you a way to test your own assumptions against the data, the way I did when I first read the HBR article.

It's through that same lens that I'm thinking about what comes next.

The questions I’m asking

What stands out after digging through hundreds of thousands of reviews isn’t just how people use LLMs today, but how quickly those patterns shift. The workbook shows that usage isn’t static—it bends with cultural trends, product launches, and platform design choices. And that volatility raises bigger questions about where this all goes from here.

- App-by-app specialization: The divide between “generalist chatbots” and “companion products” will keep widening. In particular, how will companies like Meta and OpenAI handle their role in introducing LLMs to ever younger audiences?

- Confidence vs. capability: As models improve, will users keep deferring to them blindly, or push back and rely on their own judgment more often?

- Access for everyone: I’m a firm believer that we’ve already reached a point where someone with access to an LLM can easily outperform someone without. How will companies and governments deal with access as costs continue to rise?

The recent launch of GPT-5 and a smattering of news stories make it clear that AI companionship is a real phenomenon we’ll have to contend with. But for the vast majority of people, those who aren’t actively seeking relationships with LLMs, AI is still a tool best used for getting answers, learning something new, and moving work forward.